Recently, Microsoft announced a new shift in the AI landscape—Autonomous Agents. Alongside this, they introduced the first-ever Business Central Sales Order Agent.

In this blog, I won’t discuss the concepts behind Agents or their pros and cons. The technology is still at a very early stage and needs some time to be tried and experienced first.

However, one crucial aspect of this story—the one essential for Agents to operate effectively—is the availability of tools. The core idea behind Agents is their ability to take action on behalf of the user. For this to be possible, the necessary actions must be available. We can refer to these actions as tools or functions.

In my previous blog, "Copilot Toolkit: Function Calling", I demonstrated the power of this technology. With function calling, the Copilot can execute specific functions or APIs based on user input, turning natural language requests into actions. If you’re new to this topic, I recommend reading that blog first.

With the release of Business Central 25.0, new features have been added to function calling in the Copilot Toolkit:

- Auto tools invocation

- Parallel function calling

These updates weren’t highlighted with a major announcement, just a few mentions in a video. Yet, I believe they deserve more attention as they pave the way for future Autonomous Agents.

Auto Tools Invocation

In Business Central 24.2, the Copilot Toolkit allowed you to specify the functions accessible to the Copilot. For example, when a user asked, “What hotels are available in Antwerp in June 2024 for less than $250 per night?” you could detect this intent and run an internal AL function:

    FindAvailableHotels(City: Text; MaxPrice: Decimal; FromDate: Date; ToDate: Date)
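
In the Copilot Toolkit, a function like this is exposed to the LLM as a tool: a codeunit that implements the "AOAI Function" interface (GetPrompt, Execute, GetName) and is registered with AOAIChatMessages.AddTool(). Below is a minimal sketch, assuming those interface members as they exist in BC 24.x/25.0. The codeunit ID and name, the JSON parameter names, and the placeholder search are hypothetical, and this is not the author’s "GPT GetAvailabilityByCity" implementation.

```al
// Hypothetical tool codeunit: ID, name, parameters and search logic are illustrative only.
codeunit 50100 "GPT FindAvailableHotels" implements "AOAI Function"
{
    var
        FunctionNameLbl: Label 'find_available_hotels', Locked = true;

    // Returns the tool definition (name, description, JSON schema) sent to the LLM.
    procedure GetPrompt(): JsonObject
    var
        Tool: JsonObject;
        FunctionDef: JsonObject;
        Parameters: JsonObject;
    begin
        Parameters.ReadFrom('{"type":"object","properties":{' +
            '"city":{"type":"string","description":"City name, e.g. Antwerp"},' +
            '"max_price":{"type":"number","description":"Maximum price per night"},' +
            '"from_date":{"type":"string","description":"Check-in date, YYYY-MM-DD"},' +
            '"to_date":{"type":"string","description":"Check-out date, YYYY-MM-DD"}},' +
            '"required":["city","from_date","to_date"]}');
        FunctionDef.Add('name', FunctionNameLbl);
        FunctionDef.Add('description', 'Find hotels with free rooms in a city for a date range.');
        FunctionDef.Add('parameters', Parameters);
        Tool.Add('type', 'function');
        Tool.Add('function', FunctionDef);
        exit(Tool);
    end;

    // Called with the arguments chosen by the LLM; the return value is the function result.
    procedure Execute(Arguments: JsonObject): Variant
    var
        Token: JsonToken;
        City: Text;
        MaxPrice: Decimal;
        FromDate: Date;
        ToDate: Date;
    begin
        if Arguments.Get('city', Token) then
            City := Token.AsValue().AsText();
        if Arguments.Get('max_price', Token) then
            MaxPrice := Token.AsValue().AsDecimal();
        // Format 9 = XML/ISO 8601 date format (YYYY-MM-DD)
        if Arguments.Get('from_date', Token) then
            Evaluate(FromDate, Token.AsValue().AsText(), 9);
        if Arguments.Get('to_date', Token) then
            Evaluate(ToDate, Token.AsValue().AsText(), 9);
        exit(FindAvailableHotels(City, MaxPrice, FromDate, ToDate));
    end;

    // Must match the 'name' in the tool definition above.
    procedure GetName(): Text
    begin
        exit(FunctionNameLbl);
    end;

    local procedure FindAvailableHotels(City: Text; MaxPrice: Decimal; FromDate: Date; ToDate: Date): Text
    begin
        // Placeholder: filter your own hotel/availability tables here
        // and return the matches as text or JSON for the LLM.
        exit(StrSubstNo('Search criteria: %1, max %2 per night, %3..%4', City, MaxPrice, FromDate, ToDate));
    end;
}
```

It would be registered like the tools in this post, for example AOAIChatMessages.AddTool(FindAvailableHotelsTool), where FindAvailableHotelsTool is a variable of type Codeunit "GPT FindAvailableHotels".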

Now, as a developer, what do you expect as output from this function? A filtered Hotels table based on the input parameters, right? But what would the user expect as an answer? Likely, a neatly formatted list of hotels, each with a brief summary.

In the Generate Final Answer section of my previous blog, I explained how to handle this manually. It was possible, but it required extra coding to include the function’s output in the chat history and call Azure OpenAI again.

    // Generate Final Answer after Function Execution in BC 24.2
    // Remove the tools so the second call returns a text answer instead of another function call
    AOAIChatMessages.ClearTools();
    // Add the function result to the chat history as a tool message
    AOAIChatMessages.AddToolMessage(AOAIFunctionResponse.GetFunctionId(), AOAIFunctionResponse.GetFunctionName(), AOAIFunctionResponse.GetResult());
    AzureOpenAI.GenerateChatCompletion(AOAIChatMessages, AOAIChatCompletionParams, AOAIOperationResponse);
    if not AOAIOperationResponse.IsSuccess() then
        Error(AOAIOperationResponse.GetError());
    exit(AOAIChatMessages.GetLastMessage());

Now, in BC 25.0, this has been simplified to just one line of code:

AOAIChatMessages.SetToolInvokePreference(Enum::"AOAI Tool Invoke Preference"::Automatic);

Let’s update the original Hotels Booking Copilot code to take advantage of this.

Pretty impressive, right? Now let’s look at the results in Business Central.

The Key Takeaway

Instead of building the response manually, we now let the Copilot handle it with just one line of code. The response feels more personalized and visually appealing (you can ask the Copilot to respond in HTML for better formatting in an AL RichContent field).
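
To show an HTML answer as formatted text, the page field needs ExtendedDatatype = RichContent. Below is a minimal sketch, assuming the system prompt asks the Copilot to reply in HTML. The page ID, name, and SetAnswer() procedure are hypothetical.

```al
// Hypothetical page that displays the Copilot answer as formatted HTML.
page 50101 "GPT Booking Answer"
{
    PageType = Card;
    Caption = 'Booking Copilot Answer';
    Editable = false;

    layout
    {
        area(Content)
        {
            group(AnswerGroup)
            {
                ShowCaption = false;

                field(AnswerField; AnswerText)
                {
                    ApplicationArea = All;
                    ShowCaption = false;
                    // Render the HTML returned by the LLM instead of showing raw markup
                    ExtendedDatatype = RichContent;
                    MultiLine = true;
                }
            }
        }
    }

    var
        AnswerText: Text;

    procedure SetAnswer(NewAnswer: Text)
    begin
        AnswerText := NewAnswer;
    end;
}
```

A caller would pass the result of GetAnswer() with GPTBookingAnswer.SetAnswer(Answer) and then open the page with GPTBookingAnswer.Run().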

The Final Code

Here’s the refined AL code to:

- Identify user intent

- Select the appropriate function

- Execute the function

- Generate the final answer

    procedure GetAnswer(Question: Text; var Answer: Text)
    var
        // Azure OpenAI
        AzureOpenAI: Codeunit "Azure OpenAI";
        AOAIChatCompletionParams: Codeunit "AOAI Chat Completion Params";
        AOAIChatMessages: Codeunit "AOAI Chat Messages";
        AOAIOperationResponse: Codeunit "AOAI Operation Response";
        AOAIDeployments: Codeunit "AOAI Deployments";
        // Tools
        GetHotelInfo: Codeunit "GPT GetHotelInfo";
        GetAvailabilityByCity: Codeunit "GPT GetAvailabilityByCity";
    begin
        if not AzureOpenAI.IsEnabled(Enum::"Copilot Capability"::"GPT Booking Copilot") then
            exit;

        AzureOpenAI.SetCopilotCapability(Enum::"Copilot Capability"::"GPT Booking Copilot");
        AzureOpenAI.SetAuthorization(Enum::"AOAI Model Type"::"Chat Completions", AOAIDeployments.GetGPT35TurboLatest());
        AOAIChatCompletionParams.SetTemperature(0);

        AOAIChatMessages.AddTool(GetHotelInfo);
        AOAIChatMessages.AddTool(GetAvailabilityByCity);
        // Execute tool calls and send the results back to the LLM
        // until no more tool calls are required
        AOAIChatMessages.SetToolInvokePreference(Enum::"AOAI Tool Invoke Preference"::Automatic);
        AOAIChatMessages.SetToolChoice('auto');
        AOAIChatMessages.SetPrimarySystemMessage(GetSystemMetaprompt());
        AOAIChatMessages.AddUserMessage(Question);

        AzureOpenAI.GenerateChatCompletion(AOAIChatMessages, AOAIChatCompletionParams, AOAIOperationResponse);
        if not AOAIOperationResponse.IsSuccess() then
            Error(AOAIOperationResponse.GetError());

        // Read the final answer generated by the LLM
        Answer := AOAIChatMessages.GetLastMessage();
    end;

Invocation Preferences

Three invocation preferences are available in the "AOAI Tool Invoke Preference" enum:

- Automatic – Automatically invokes the tool calls returned by the LLM and sends the results back, repeating until no more tool calls are required. This option is ideal if you always want the AI to generate the final answer.

- Invoke Tools Only – Executes the tool calls returned by the LLM without sending the results back. This matches the behavior already available in version 24.2 and suits cases where you prefer to generate the final answer manually or run other business logic based on the function results.

- Manual – Requires manual invocation of tool calls, with no results appended to the chat history. Use this if you want the LLM to suggest functions but handle execution and response building manually.

Parallel function calling

Another enhancement in the Copilot Toolkit v25.0 is parallel function calling. Instead of selecting a single function to resolve a user request, the LLM can now request several functions in one response. Copilot executes each of them and uses their combined results to generate the final answer.

Note that this is not sequential execution, where one function’s output becomes the input for another: each function is called independently with its own arguments.

Let’s revisit our hotel booking Copilot with a slightly different request:

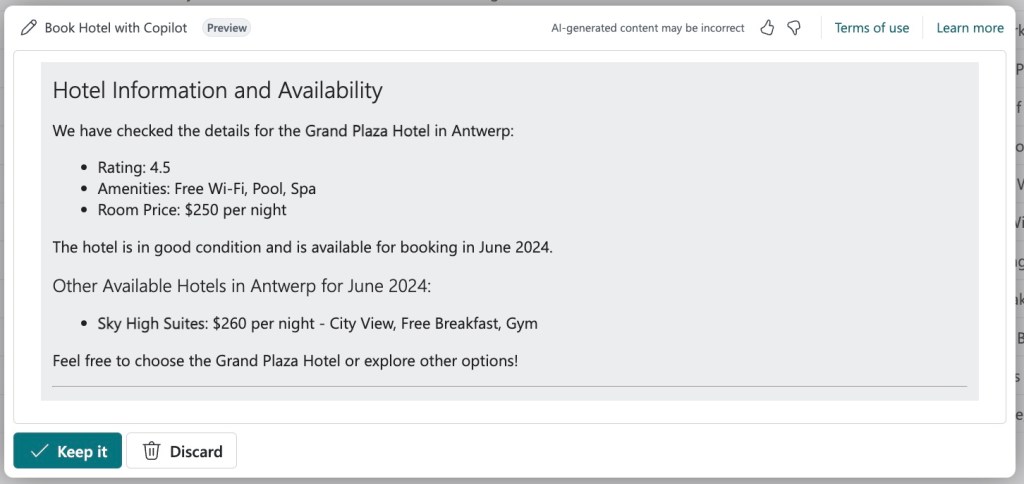

“Hey, last year during my holiday in Antwerp I stayed at Grand Plaza. Can you check if it’s still in good condition and available for June 2024?”

With Invoke Tools Only

To use parallel function calling with the Invoke Tools Only preference, where the toolkit executes the functions automatically but you handle their results yourself, use the following code:

    procedure GetAnswer(Question: Text; var Answer: Text)
    var
        AzureOpenAI: Codeunit "Azure OpenAI";
        AOAIChatCompletionParams: Codeunit "AOAI Chat Completion Params";
        AOAIChatMessages: Codeunit "AOAI Chat Messages";
        AOAIOperationResponse: Codeunit "AOAI Operation Response";
        AOAIFunctionResponses: List of [Codeunit "AOAI Function Response"];
        AOAIFunctionResponse: Codeunit "AOAI Function Response";
        // Tools
        GetHotelInfo: Codeunit "GPT GetHotelInfo";
        GetAvailabilityByCity: Codeunit "GPT GetAvailabilityByCity";
    begin
        if not AzureOpenAI.IsEnabled(Enum::"Copilot Capability"::"GPT Booking Copilot") then
            exit;

        AzureOpenAI.SetCopilotCapability(Enum::"Copilot Capability"::"GPT Booking Copilot");
        AzureOpenAI.SetAuthorization(Enum::"AOAI Model Type"::"Chat Completions", GetEndpoint(), GetDeployment(), GetApiKey());
        AOAIChatCompletionParams.SetTemperature(0);

        AOAIChatMessages.AddTool(GetHotelInfo);
        AOAIChatMessages.AddTool(GetAvailabilityByCity);
        // Execute the tool calls, but do not send the results back to the LLM
        AOAIChatMessages.SetToolInvokePreference(Enum::"AOAI Tool Invoke Preference"::"Invoke Tools Only");
        AOAIChatMessages.SetToolChoice('auto');
        AOAIChatMessages.SetPrimarySystemMessage(GetSystemMetaprompt());
        AOAIChatMessages.AddUserMessage(Question);

        AzureOpenAI.GenerateChatCompletion(AOAIChatMessages, AOAIChatCompletionParams, AOAIOperationResponse);
        if not AOAIOperationResponse.IsSuccess() then
            Error(AOAIOperationResponse.GetError());

        // With parallel function calling there can be one response per tool call
        AOAIFunctionResponses := AOAIOperationResponse.GetFunctionResponses();
        foreach AOAIFunctionResponse in AOAIFunctionResponses do
            if AOAIFunctionResponse.IsSuccess() then
                Answer += Format(AOAIFunctionResponse.GetResult());

        // No tool was called: fall back to the LLM's text answer
        if AOAIFunctionResponses.Count() = 0 then
            Answer := AOAIChatMessages.GetLastMessage();
    end;

In this case, the user will see a less polished answer—essentially the raw results from each function.
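
If you stay with Invoke Tools Only, the raw output can at least be made easier to read. Below is a minimal sketch of a hypothetical helper, FormatFunctionResults(), that labels each successful result with its function name and puts each one on its own line. It only uses the "AOAI Function Response" methods already shown in this post.

```al
// Hypothetical helper: one labelled line per successful tool call.
local procedure FormatFunctionResults(FunctionResponses: List of [Codeunit "AOAI Function Response"]): Text
var
    FunctionResponse: Codeunit "AOAI Function Response";
    Result: TextBuilder;
begin
    foreach FunctionResponse in FunctionResponses do
        if FunctionResponse.IsSuccess() then
            Result.AppendLine(StrSubstNo('%1: %2', FunctionResponse.GetFunctionName(), Format(FunctionResponse.GetResult())));
    exit(Result.ToText());
end;
```

In the GetAnswer procedure above, the foreach loop could then be replaced with Answer := FormatFunctionResults(AOAIFunctionResponses);.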

With Automatic Invocation Preference

The best part?

When using the Automatic invocation preference, you don’t need to change the final code shown earlier: it handles parallel function calls seamlessly.

I only added the following to the system prompt to highlight parallel function calling:

“At the very end, add System Information – list of tools and parameters used to get the answer.”

Again, with the Automatic invoke preference, no extra code is needed to achieve this. It just works.
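
GetSystemMetaprompt() itself isn’t shown in this post. Below is a minimal sketch of how the extra instruction could be appended to the system prompt. Only the last instruction comes from the text above; the other lines are hypothetical placeholders for your own metaprompt.

```al
local procedure GetSystemMetaprompt(): Text
var
    Metaprompt: TextBuilder;
begin
    // Hypothetical base instructions; replace with your own metaprompt
    Metaprompt.AppendLine('You are a hotel booking assistant in Business Central.');
    Metaprompt.AppendLine('Use the available tools to answer questions about hotels and their availability.');
    // Instruction added to make the parallel tool calls visible in the answer
    Metaprompt.AppendLine('At the very end, add System Information – list of tools and parameters used to get the answer.');
    exit(Metaprompt.ToText());
end;
```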

Why It's Important

Parallel function calling, combined with automatic answer generation, unlocks many possibilities for the future of autonomous agents. The best part is that we can use these capabilities today in custom Copilots. They become more user-focused and feel more personalized, and, as the cherry on top, require less code to implement. A fantastic addition!